It all began with DALL-E, a machine learning model developed by OpenAI to generate digital images from natural language descriptions. It is based on GPT-3, a language model using deep learning to produce human-like text, which was modified to generate images. It was soon followed by DALL-E 2 which is supposed to generate more realistic images at higher resolutions. As of 20 July 2022, DALL-E 2 entered into an invitation-based beta test, is closed source and not open to the public.

But on August 22, 2022, the AI art community was shaken by the release of an open-source alternative, Stable Diffusion, which you can install and run locally using modest hardware. Within days, PCWorld described it as the new killer app. And it surely is.

How to test it

The easiest way to test the Stable Diffusion model is by registering a free account at DreamStudio, although image creation is not free. But as of now, you will receive a free starting credit of 2 £ with which you can create 200 images. After that, every image costs one pence. Image creation is very fast, most probably much faster than you will ever achieve on your own computer.

If you want to use it on your own computer and play around with the code, you can download it as a Python package called “diffusers”. The process is described in detail in this blog post. The code is constantly updated and optimized, almost daily at the moment. Especially the hardware constraints are constantly lowered. Images in the model’s standard resolution of 500 by 500 pixels can now easily be created with a CUDA-enabled Nvidia graphics card with 4 GB of memory. A CPU-only approach is possible but takes much longer. So if you want the latest and greatest, check the git repository and install the Python package directly by using

pip install -U git+https://github.com/huggingface/diffusers

In the meantime, a lot of GUIs for different platforms have emerged, a lot more than can be easily listed here. A quick search for “Stable Diffusion GUI” will already show a lot of installable and web-based solutions.

How it works

The image creation is based on a handful of parameters, especially the textual description, called a prompt, and a random start value. All parameters equal, the model’s output is strictly deterministic: the same values will produce the same image. The following gallery shows some self-created examples, with the used text description shown underneath in the lightbox view.

The model is trained on a huge data set of pictures and their descriptions (LAION-5B). The quality and type of the output are heavily dependent on the keywords used in the image prompts. A lot of people are now trying to find out which combination of which keywords gives the results they want to achieve., e.g. in this Reddit channel where examples are shown and discussed. Prompts can get very long while trying to be very specific to create a certain output.

So, can you get every image content by just describing it? Certainly not. There are a few problems that we will discuss here. First and foremost, most of the results of the model are just plain unusable garbage. People try to remedy that by creating lots and lots of variations by iterating over the random starting value mentioned above. Then they pick the most promising results and try to optimize them by adjusting the other free parameters and the prompt. While this sounds like a lot of work, the results are often stunning, sometimes at the first few tries. The appeal is that you can achieve results on your own computer you would never be able to create yourself with pen, color, and paper or your favorite image creation software.

Another problem is, that the model doesn’t “understand” the content it’s creating, especially the anatomy of the human body. So you will see humans with three legs, three arms, six fingers, hands without a thumb, a lot of misproportions, and, especially, eyes that are completely strange and unnatural. As you looked at the last image of the image gallery above, you might have already thought that the result is unacceptable.

Another AI to the rescue

For several years now there were efforts to use AI models to optimize computer images, e.g. the most prominent was Adobe Sensei, but there are already a lot of others, mostly to be able to enlarge images and still keep up the quality. But this is not the zoom-and-enhance-button from CSI and information can’t be invented by the AI but just be guessed. That said, the guesses are often surprisingly good.

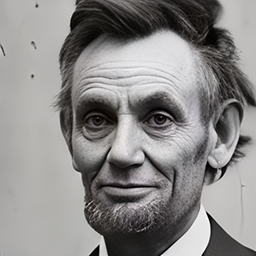

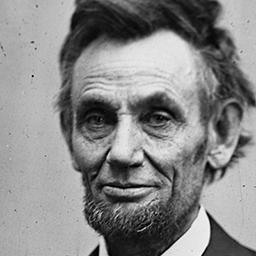

So to address the problem of the uncanny eyes, people resort to AI models that can optimize and reconstruct images. A very popular one is GFP-GAN, which we will discuss and use here. This model aims at the face restoration of old and low-quality photos and does a very good job. As the scope is just the faces and not the background, you can automatically include the usage of another AI model, Real-ESRGAN, to enhance also the background. Additionally, the model usually enlarges the image, while keeping up the quality.

The result can be seen here. Move the slider to the right to see the optimizations of the eyes, the teeth, and the skin. As additional examples, you can see what GFPGAN can do with old photographs and old paintings.

As a conclusion, the efforts and the development that the release of Stable Diffusion has sparked just two weeks after its initial release is stunning. There are daily changes and optimizations and the impact of this new tool on the AI art community and the art community as a whole is not really foreseeable. We can just say: we ain’t seen nothing yet.

![a portrait of intergalactic [name], symmetric, grim - lighting, high - contrast, intricate, elegant, highly detailed, centered, digital painting, artstation, concept art, smooth, sharp focus, illustration, artgerm, tomasz alen kopera, peter mohrbacher, donato giancola, joseph christian leyendecker, wlop, boris vallejo](https://d3v.one/wp-content/uploads/stable_diffusion.jpg)